
| | Crash Course into AI |  |


| Author | Message |

|---|

Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Sat Dec 08, 2012 8:54 pm | |

| Yes, sorry, I've been neglecting this. The problem is that I went way too fast in the first post, so I'm not really sure how to continue. Anyway, here goes the second part, where I improve the way we calculate the best action to take. The third part will be about the problems I think we could run into, and some possible options.

Last time, we had a state s, from which we took an action a to end up in state s' (the notation for the successor state). Then we would choose which action to take depending on the values we calculated for s'. The problem is that we need to know this s', which we can't fully simulate because of all the random and unknown variables in the equation.

What we can do is take all the calculations "half a step backwards", to a point where we know all the variables. That point is still in state s (the one we are in), but after we have committed to taking a specific action. Then, instead of having V(s') (expected reward from state s'), we have Q(s,a) (expected reward from state s if we commit to taking action a).

- Spoiler:

Here should be an image about this shift

This makes choosing the action easier, as we only have to pick the action with the largest Q(s,a). But the equations change a bit. To calculate Q(s,a), we do Q(s,a) = W1*F1(s,a) + W2*F2(s,a) + ... + Wn*Fn(s,a) (I would use the summation symbol, but I don't think it is supported here; also, I think it could work better, although it would be harder to code, if the terms were not all simply added up).

The other thing we need is to update the weights as we receive rewards. For each function Fi(s,a) with weight Wi, once we receive the reward r and land in state s', we have: Wi = Wi + alpha*(correction)*Fi(s,a), with correction = (r + max(Q(s',a'))) - Q(s,a). The correction is the difference between what we actually observed, that is, the reward we just got plus the best reward expected from s' onward (r + max(Q(s',a'))), and what we had predicted, Q(s,a). max(Q(s',a')) is the maximum expected reward over the actions a' available from s', and it assumes that we act optimally afterwards. The rest is the same as last time.

Recapping, these changes allow us to run this algorithm without the agent knowing the exact outcome of its actions (although it still needs some knowledge about them). Note also that this time I could write out the exact way of computing the correction applied to the weights, which is harder when only states are used. We can also now use the action we are taking in the features.

Again, thanks for reading, and please ask about whatever you didn't understand or want me to explain further. | |

|   | | ~sciocont

Overall Team Lead

Posts : 3406

Reputation : 138

Join date : 2010-07-06

|  Subject: Re: Crash Course into AI  Tue Dec 11, 2012 12:05 am | |

| | |

|   | | NickTheNick

Overall Team Co-Lead

Posts : 2312

Reputation : 175

Join date : 2012-07-22

Age : 28

Location : Canada

|  Subject: Re: Crash Course into AI  Tue Dec 11, 2012 12:08 am | |

| Great work Daniferrito. Unfortunately this is where my knowledge of maths ends, so I can't really comment, but wonderful job nonetheless. | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Tue Dec 11, 2012 12:03 pm | |

| Ok, third part, and the first one I will need help with. We are going to talk about the functions that describe the state-action pair.

The basic gist is that the more important something should be to the agent, the larger the function's value should be. It doesn't matter whether the thing is good or bad, or whether the value is large and positive or large and negative; the algorithm will handle that. But once the thing is no longer important, the value should go towards 0. The functions shouldn't go to infinity at any point either. (Actually, any function will work, and the agents will do their best to maximize rewards, but choosing good functions makes it easier for the algorithm to get closer to optimality.)

Let's look at an example to see what I mean. For this example we'll use the distance to something. The function will be referred to as F(s,a), and d will be the distance from the agent to the thing after the action has been applied. It is easy to see that the closer something is, the more important it is to us. The easiest thing to do would be to use F(s,a) = d. Let's see how that looks.

For all graphs, the X axis (horizontal) represents d, with the positive side to the right, and the Y axis (vertical) represents F(s,a), with the positive side going up. The axes are in black, and the values in blue. The axes cross at (0,0).

- Spoiler:

As you can see, when the agent is close to the thing, the function has a low value, and as the agent gets further away, the value goes up. That doesn't look like what we wanted, as it doesn't go to 0 when the thing is no longer important. Let's try F(s,a) = 1/d:

- Spoiler:

Now it looks better. When we get closer to the thing, the function goes up, meaning we should care more about it. However, as it goes to infinity as the distance goes to 0, the agent will prefer to get as close to the thing as possible (if it is maximizing it, as with food), overriding any other function. It would still work, but it would behave oddly when getting really close to the thing. We can solve this by using F(s,a) = 1/(d+1):

- Spoiler:

Now we have a bigger F(s,a) when the thing is more meaningful to the agent, and we never reach infinity (at least in the range of d that is physically possible, as we can't have negative distances).

Not all the functions need to be continuous. For example, we can have a function that encodes whether we are eating: it has a value of 1 if the agent is eating and 0 if it isn't.

So what needs to be discussed is the set of functions that we need to encode the state-action pair; that is, every meaningful aspect needs to have a function. I suggest we brainstorm them here.

Edit: Remember, we need to be able to code them. | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Tue Dec 11, 2012 5:37 pm | |

| A word about distances: using the raw distance (a straight line) won't work too well with agents; we need to use the real walking distance (or an approximation of it). Let's see an example of what I mean.

This is a Pac-Man board (although it looks like a maze) that I made in Paint. The yellow dot at the bottom represents the food pellet we are trying to reach. If we use straight-line distance, the agent (Pac-Man) would prefer to go to the positions with lower numbers on them:

- Spoiler:

That means that from its starting position it would go left or right, as both have a 2. From there, it would go back to its starting position, as it would prefer the 1 over the 3. And it would stay there forever. However, if we use real maze distance, we would have this situation:

- Spoiler:

Now it has two possible places to go, an 8 and a 10. It would choose the 8, from there the 7, then the 6, ... until it finally gets to the food and wins the game.

Calculating the real distance is much harder than just using the straight-line distance, but it is mandatory if you don't want agents walking into a wall because there is something they are interested in on the other side. | |
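One common way to get the walking distance on a grid like the Pac-Man board is a breadth-first search. The sketch below is not taken from the thread; the grid encoding ('#' for walls, anything else walkable), the function name `mazeDistance` and the -1 return for unreachable targets are all assumptions made for illustration. It expects a rectangular grid and a start cell inside it.

```cpp
#include <queue>
#include <string>
#include <utility>
#include <vector>

// Walking distance (number of steps) between two cells of a grid maze,
// found with breadth-first search. '#' is a wall. Returns -1 if the goal
// cannot be reached.
int mazeDistance(const std::vector<std::string>& grid,
                 int startRow, int startCol, int goalRow, int goalCol) {
    const int rows = static_cast<int>(grid.size());
    const int cols = rows > 0 ? static_cast<int>(grid[0].size()) : 0;
    std::vector<std::vector<int> > dist(rows, std::vector<int>(cols, -1));
    std::queue<std::pair<int, int> > frontier;
    dist[startRow][startCol] = 0;
    frontier.push(std::make_pair(startRow, startCol));
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};
    while (!frontier.empty()) {
        const int r = frontier.front().first;
        const int c = frontier.front().second;
        frontier.pop();
        if (r == goalRow && c == goalCol) return dist[r][c];
        for (int k = 0; k < 4; ++k) {
            const int nr = r + dr[k];
            const int nc = c + dc[k];
            if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
            if (grid[nr][nc] == '#' || dist[nr][nc] != -1) continue;
            dist[nr][nc] = dist[r][c] + 1;  // one step further than (r, c)
            frontier.push(std::make_pair(nr, nc));
        }
    }
    return -1;
}
```

On a board where the food sits two cells away in a straight line but behind a wall, this returns the length of the detour rather than 2, which is the behaviour the post asks for.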

|   | | NickTheNick

Overall Team Co-Lead

Posts : 2312

Reputation : 175

Join date : 2012-07-22

Age : 28

Location : Canada

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 2:13 am | |

| How would we define the paths that the agent takes in a dynamic environment?

Also, what else is there to cover in terms of AI? I want to try to revive this thread, as it is very useful and seems to have died down recently. | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 7:55 am | |

| For pathfinding, I was thinking of something similar to this video. At least for the 3D world. For microbe, nothing is needed.

The second problem is which exact path to take. In a grid world, we only have 4 possible directions. In 2D, we have the whole circumference. We have to give the AI agent a few options to choose from, so we have to limit its valid options.

Other than that, the other thing I can think of is the functions they need to maximize, as I explained a few posts before this one. Or at least, what things the AI should care about. | |

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 10:05 am | |

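| Are you sure we need to use so much math? For example, we can use simple stuff like a radius: we can give the cell a certain radius, and then just do something like the following (but much more complex!) (pseudo-code): - Code:

    #include <cstdlib>  // std::rand

    // Placeholder actions; the names are kept from the original sketch.
    void CellOmNomNoms_Object();  // eat the food
    void CellNaaa2();             // in range, but decided not to eat
    void CellNaaaa1();            // food is out of range

    void DecideWhetherToEat(double cellDistanceToFood)
    {
        if (cellDistanceToFood < 6)
        {
            int eatOrNot = std::rand() % 100 + 1;  // roll in 1..100
            if (eatOrNot > 75)                     // eat about 25% of the time
            {
                CellOmNomNoms_Object();
            }
            else
            {
                CellNaaa2();
            }
        }
        else
        {
            CellNaaaa1();
        }
    }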

| |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 11:54 am | |

| We could use that for simple scenarios, but that would fall flat when dealing with multiple things at the same time. And yes, we need to use maths.

The problem with your way is that you can't account for anything you don't know at programming time. And as we are aiming for procedurally generating everything, there is very little we know at programming time. | |

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 12:07 pm | |

| - Daniferrito wrote:

- We could use that for simple scenarios, but that would fall flat when dealing with multiple things at the same time. And yes, we need to use maths.

The problem with your way is that you can't account for anything you don't know at programming time. And as we are aiming for procedurally generating everything, there is very little we know at programming time.

First, that could be used in a prototype. Second, I don't know much about AOP, but I believe we can use it for more than one thing, like you plan to use the "eat or not" function. Basically, I believe that that function and my if/else do the same thing. By saying that I think we don't need to use too much math, I meant that I don't think we need mathematical functions here. Your function basically says that the closer the cell gets to the food, the bigger the chances it will eat it. Speaking of that, if we really think about it, our ideas are more or less the same; after all, your function will probably use a bunch of if/else or case/switch statements and will act as an agent. Also, can you be more specific about the last sentence? | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Wed Jan 16, 2013 1:06 pm | |

| Ok, if statements for AI.

Let's assume you have an AI that runs only on if statements. For example: if there is food nearby, eat the food. For one element, we have one if statement.

If we add a second element into the equation, for example a predator, things get a bit more complicated. If there is food nearby, eat the food. If there is a predator nearby, run away from the predator. Now, what happens when there is both food and a predator, and the two statements collide? We need a third if statement for that case: if there is food and a predator nearby, run away from the predator. Two elements, three if statements. You can already see where the problem is.

Let's go one step further. Now we have a third element, oxygen, which the cell must gather. On top of the previous if statements (3 of them), we now need another that says: if there is oxygen nearby, go for the oxygen. Again, we have ifs that can collide, so we need extra ifs. If there is food and oxygen nearby, get the oxygen. If there is oxygen and a predator nearby, run away. If there is food, oxygen and a predator nearby, fight the predator. One extra element, 4 extra ifs. Now we are at 7.

The more you add, the more ifs you will need. If for one level of complexity you need n if statements, then to add an extra element you will need 2n + 1 statements.

Of course you can simplify some of the ifs, but the problem is still there. The number of if statements will grow exponentially.

However, with weighted functions, if you want to add an extra element, you just have to add the equation for it. Also, as it is a learning agent, it will behave like normal animals, learning what is good and what is bad on the go.

One more thing. Your if agent doesn't care at all about food outside its "I want that food" radius. Mine does. If there is nothing significant to the cell nearby, it would go towards the nearest food.

I believe this is long enough for now. I will get back to this later, especially if you are still not convinced that the if agent is impractical. | |
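The 2n + 1 rule from the post above can be checked with a few lines of C++. This is just a counting sketch (the function name `ifStatementsNeeded` is invented here): starting from one statement for one element, each extra element turns n into 2n + 1, which works out to 2^k - 1 statements for k elements.

```cpp
// Number of if statements for `elements` things the agent reacts to,
// following the post's rule: n becomes 2n + 1 for each added element.
// Equivalent to 2^elements - 1; overflows for more than 64 elements.
unsigned long long ifStatementsNeeded(unsigned elements) {
    unsigned long long n = 0;
    for (unsigned i = 0; i < elements; ++i) {
        n = 2 * n + 1;
    }
    return n;
}
```

Three elements give the 7 statements counted in the post, while ten elements would already need 1023. The weighted approach adds one function and one weight per element instead.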

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 8:58 am | |

| Do you have any other way to implement it? f(x, y) = ... is not part of the C++0x standard. We can't just go around saying to the PC "I command you to use your thingy and create me my weighted function, and then magically use it on some virtual cells."

Also, we can thread add more ifs. The fact that something is long doesn't mean one can't make it. Also, I don't plan to add n elements. Does your agent have an infinite ability to spot food? BTW, do you have a plan for how to create that learning agent?

P.S. C++ is not based on AOP, so don't think that agents can solve everything. They are just useful tools. Also, our project will heavily use OOP. | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 11:21 am | |

| Every single programming language that I know of can do something like f(x, y), whether you mean having two input arguments or two outputs (although two outputs are a bit harder in some languages). All the way from the lowest level (assembly language, like MIPS) to the highest levels (like Python).

Of course we have to give the computer the functions. What we don't need to give it is the weights. It will learn those by itself, depending on the rewards it gets.

Basically, what it does is: for every possible action (we give it the actions it is allowed to take in a given state; this is the point I'm asking for help with), calculate the value of each function, multiply each one by its weight, add them up, and choose the action with the highest total value.

In order to learn the weights, an extra step is needed. Each time an action finishes, the agent receives a reward (probably in the shape of food, or any other thing). Eating gives lots of food, and moving takes away some food (negative reward). Then it adjusts every weight: Wi = Wi + alpha*(correction)*Fi(s,a), with correction = (r + max(Q(s',a'))) - Q(s,a). Wi is weight number i, and Fi is weighted function number i (they are supposed to be linked).

- Quote :

- Also, we can thread add more ifs.

I don't get it.

And yes, I have a plan for how to create that learning agent. It's here. And I have already created one (a Pac-Man agent).

And aspect-oriented programming is a particular vision of object-oriented programming, but it is still object-oriented programming. I don't see how it affects this, though. | |
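The "choose the action with the highest total value" step described above might look like the following in C++. This is a sketch under assumptions: the name `chooseAction` and the layout of `featuresPerAction` (one row of already evaluated functions per allowed action) are invented for illustration, and at least one action is assumed to be available.

```cpp
#include <cstddef>
#include <vector>

// Returns the index of the action with the highest
// Q(s,a) = W1*F1(s,a) + ... + Wn*Fn(s,a).
// featuresPerAction[k] holds F1..Fn evaluated for candidate action k, and
// each row must be as long as `weights`. Ties go to the earliest action.
std::size_t chooseAction(
    const std::vector<double>& weights,
    const std::vector<std::vector<double> >& featuresPerAction) {
    std::size_t best = 0;
    double bestQ = 0.0;
    for (std::size_t k = 0; k < featuresPerAction.size(); ++k) {
        double q = 0.0;
        for (std::size_t i = 0; i < weights.size(); ++i) {
            q += weights[i] * featuresPerAction[k][i];
        }
        if (k == 0 || q > bestQ) {
            bestQ = q;
            best = k;
        }
    }
    return best;
}
```

Which actions are allowed in a given state is left to the caller, since that is exactly the open question raised in the post.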

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 1:31 pm | |

| First, we can't say vague or very abstract things in functions, so in C++ it will probably come down to if, else, goto, switch, case, break, while, do-while, for, continue and exit. Second, by "no f(x, y)" I meant no purely mathematical functions; it must contain some control statements. And BTW, if you convert the math notation to C++, x and y in the declaration will just be arguments. Third, I meant we can nest if and else or any other control statements.

The above can be fixed, and we could convert your model to real C++. However, while your learning model can work very well in Pac-Man, so feel free, in Thrive it will encounter some fundamental issues. I will explain them first in the dry way, and then with an analogy:

1. Computers and other machines can't think very abstractly, vaguely or philosophically, and they don't have any biological impulse to breed, survive, eat, etc., so they have no sense of what is "bad" and what is "good" for a biological creature (survive, breed, eat...). Therefore, to have any good learning AI, the programmer must first define what is good for the cell and what is bad for it. In Thrive a lot of things can happen, so we can't define what is "good" and what is "bad" for every possible situation; when a cell encounters a situation we missed, the AI will stop working and will not respond to it.

2. Now we have another problem: the computer doesn't know how to connect events or understand any connection between them. One can't just define that dying is "bad" and expect the computer to avoid things that have a high chance of leading to death. The programmer will need to define what is "bad" and what is "good" specifically and in detail for every situation, and then we are back to 1.

3. Cells and non-sentient creatures that don't have a brain don't really "learn"; they "learn" through natural selection, because the individuals that act "dumb" die and the ones that act "smart" thrive. Because of that, to have a good learning AI we need to apply auto-evolution to the AI itself, which will make things much more complex. Creatures that have a brain but are not very intelligent really do learn some things, but other things they "learn" like cells.

4. We treat cell species as a whole (Seregon's population dynamics and compound system), except for the individuals that are near the player, so a really good AI won't have much effect on the player, unless we apply the AI to whole species (making things very complex).

That fundamental problem was once addressed (in a much less polite manner than I am using now) by banshee (a very talented programmer) in his last thread (similar to roadkillguy's last thread; also a talented programmer), but in the context of auto-evo, which was then ill-formulated.

Analogy time: programmers are not like "horse whisperers" but like normal horse riders. They can't whisper vague and very abstract commands to the horse and have it obey; they have to give the horse specific commands. But computers are much more "dumb" than horses.

P.S. AOP is in fact a paradigm itself, but in C++ it is used with OOP, much like functional programming: we can use functions in classes, but that doesn't mean that functions originated in OOP. I said it just so you won't get too "excited" about agents. | |

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 4:31 pm | |

| 1 - Yes, but we can tell them to maximize a value. From a given state, each action will have a value, and the agent will perform the action with the greatest value. Those values are calculated like so:

Q(s,a) = W1*F1(s,a) + W2*F2(s,a) + ... + Wn*Fn(s,a)

The Fn(s,a) are the things we're discussing right now. They are functions that we code in beforehand.

- Spoiler:

Let's say we only have one function, defined like so:

F1(s,a) = 1/(1+distanceToClosestFood)

And its weight (W1) is something positive, like 1.

The agent will calculate all the Q(s,a), which are predictions of what will happen if it tries to perform action a. For each available action, it can calculate where it will end up after the action is done. From there, it can calculate distanceToClosestFood, plug that value into F1(s,a), and calculate the result. Then it compares all the Q(s,a) and returns the action whose Q(s,a) is highest.

Long story short: the agent will conclude that the best action is the one that brings it closer to the nearest food.

2 - The computer learns the connections. That's what the second part of the algorithm is there for. It's the part that learns. Let me explain:

When an agent gets a reward, all weights are adjusted, and the size of each adjustment is determined by how big its function was at that moment. For example, a cell passes over a bit of food. Right when it is on top of it, it receives a positive reward. Then all weights are adjusted based on this.

The weighted functions will look like this:

F1 (food) = 1/(1+distanceToClosestFood) = 1/(1+0) = 1 -> W1 += alpha*reward*F1 = alpha*reward*1

F2 (enemy) = 1/(1+distanceToClosestEnemy) = 1/(1+99) = 0.01 -> W2 += alpha*reward*F2 = alpha*reward*0.01

That means that both W1 and W2 will go up, but W1 will go up 100 times more.

In the case of an enemy eating the cell, it will look similar, but now the reward will be negative, and as F2 will be higher than F1, W2 will go down much faster than W1.

This way, the agent is effectively learning that being close to food is good, and being close to enemies is bad. It is effectively learning the connection between being close to food and eating.

3 - We have already discussed this a bit (around post 11 of this thread, between scio and me), and concluded that all agents of the same species will share weights (W). This effectively means some sort of racial memory, or behavior. It is a bit inaccurate, but it is the closest we can get.

- Spoiler:

The ideal situation would be to have separate weights for each individual, received at birth with a little randomization, so that the ones that randomly get more suitable weights survive to pass those traits on to their children. However, that would require thousands (if not more) of individuals, each one unique and stored separately in memory.

4 - Same as 3.

You mean Bashinerox in his thread Why Auto-Evo is Dead? I kind of agree with that thread, but I don't think this is the same thing. The reasons are in this thread. This system is well defined. This system has already been done successfully. | |

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 7:13 pm | |

| - Daniferrito wrote:

- 1 - Yes, but we can tell them to maximize a value. From a given state, each action will have a value, and the agent will perform the action with the greatest value. Those values are calculated like so:

Q(s,a) = W1*F1(s,a)+W2*F2(s,a)+...+Wn*Fn(s,a)

Fn(s,a) are the things that we're discusing right now. They are functions that we code in before-hand.

- Spoiler:

let's say we only have one function, defined like so:

F1(s,a) = 1/1+distanceToClosestFood

And its weight (W1) is something possitive, like 1.

The agent will calculate all Q(s,a), wich are predictions on what will happen if it tries to perform action a. For all given actions, it can calculate where will it finish after the action is done. From there, it can calculate distanceToClosestFood, plug that value into F1(s,a), and calculate the resoult. Then it compares all Q(s,a), and return the action whose Q(s,a) is higher.

Long story short: the agent will conclude that the best action is the one that brings it closer to the nearest food.

2 - The computer learns the connections. That's what the second part of the algorithm is there for. It's the part that learns. Let me explain:

When an agent gets a reward, all weights are adjusted, and the size of each adjustment is determined by how big its function was at that moment. For example, a cell passes over a bit of food. Right when it is on top of it, it receives a positive reward. Then all weights are adjusted based on this.

The weighted functions will look like this:

F1 (food) = 1/(1+distanceToClosestFood) = 1/(1+0) = 1 -> W1 += alpha*reward*F1 = alpha*reward*1

F2 (enemy) = 1/(1+distanceToClosestEnemy) = 1/(1+99) = 0.01 -> W2 += alpha*reward*F2 = alpha*reward*0.01

That means that both W1 and W2 will go up, but W1 will go up 100 times more.

In the case of an enemy eating the cell, it will look similar, but now the reward will be negative, and as F2 will be higher than F1, W2 will go down much faster than W1.

This way, the agent is effectively learning that being close to food is good and being close to enemies is bad. It is effectively learning the connection between being close to food and eating.

3 - We have already discussed this a bit (around post 11 on this thread, between scio and me), and have concluded that all agents from the same species will share weights (W). This effectively means some sort of racial memory, or behavior. It is a bit inaccurate, but it is the closest we can get.

- Spoiler:

The ideal situation would be to have separate weights for each individual, received at birth with a little randomization; the individuals that randomly get more suitable weights would then survive to pass those traits on to their children. However, that would require thousands (if not more) of individuals, each one unique and stored in memory separately.

4 - Same as 3

You mean Bashinerox in his thread Why Auto-Evo is Dead? I kind of agree with that thread, but I don't think this is the same thing. The reasons are in this thread. This system is well defined. This system has already been done successfully.

Your system is good, but we need to define every state and action, so the computer will be able to make the connection between the reward and the action. Also, does it have any effect on the Population dynamics system? | |
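A minimal Python sketch of the scheme quoted in this post, assuming just the two features discussed (food and enemy) and the update exactly as the post states it, Wi += alpha*reward*Fi. The class name `SpeciesWeights`, its methods and the example distances are hypothetical, chosen only for illustration. Textbook approximate Q-learning scales the update by the temporal-difference error rather than by the raw reward; the sketch deliberately keeps the post's simpler form.

```python
from dataclasses import dataclass, field


def food_feature(distance_to_closest_food: float) -> float:
    """F1 from the post: 1 / (1 + distanceToClosestFood)."""
    return 1.0 / (1.0 + distance_to_closest_food)


def enemy_feature(distance_to_closest_enemy: float) -> float:
    """F2 from the post: 1 / (1 + distanceToClosestEnemy)."""
    return 1.0 / (1.0 + distance_to_closest_enemy)


@dataclass
class SpeciesWeights:
    """One weight per feature, shared by all agents of a species (hypothetical container)."""
    weights: list = field(default_factory=lambda: [1.0, 0.0])  # [W1 food, W2 enemy]

    def q_value(self, features):
        # Q(s,a) = W1*F1(s,a) + W2*F2(s,a) + ... + Wn*Fn(s,a)
        return sum(w * f for w, f in zip(self.weights, features))

    def best_action(self, features_by_action):
        # Return the action whose predicted outcome has the highest Q(s,a).
        return max(features_by_action, key=lambda a: self.q_value(features_by_action[a]))

    def update(self, features, reward, alpha=0.1):
        # Update as stated in the post: Wi += alpha * reward * Fi.
        self.weights = [w + alpha * reward * f for w, f in zip(self.weights, features)]


species = SpeciesWeights()
# Hypothetical predicted distances to food / enemy after each action.
options = {
    "left": [food_feature(4.0), enemy_feature(99.0)],
    "right": [food_feature(1.0), enemy_feature(99.0)],
}
print(species.best_action(options))  # right (it ends up closer to the food)

# The cell is on top of the food (distance 0) and the enemy is 99 away.
species.update([food_feature(0.0), enemy_feature(99.0)], reward=1.0)
```

With a positive reward both weights rise, and the food weight rises 100 times more than the enemy weight, matching the worked numbers in the post.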

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 7:34 pm | |

| We don't have to define each state and action pair. That's what the weighted functions (features) are for. We extract from the state the things that interest us and plug them into the functions (if we have a state, it's really easy to calculate the distance from the cell we care about to the nearest food). Then each state is simplified to these features. We only have to tell the agent how to extract those features.
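As a rough illustration of "telling the agent how to extract features", here is a small Python sketch. The `State` layout (plain 2D positions) and the function names are assumptions made for the example, not anything defined in this thread; distances are straight-line.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    """Hypothetical world snapshot holding only positions as (x, y) tuples."""
    cell: tuple
    food: list
    enemies: list


def closest_distance(origin, targets):
    # Straight-line distance to the nearest target; infinity if there are none.
    return min((math.dist(origin, t) for t in targets), default=math.inf)


def extract_features(state):
    # Each feature is 1 / (1 + distance): 1 when on top of the target,
    # falling towards 0 with distance, and exactly 0 when no target exists.
    return [
        1.0 / (1.0 + closest_distance(state.cell, state.food)),
        1.0 / (1.0 + closest_distance(state.cell, state.enemies)),
    ]


state = State(cell=(0.0, 0.0), food=[(3.0, 4.0), (10.0, 0.0)], enemies=[])
features = extract_features(state)  # nearest food is 5 away, no enemies
```

However large the state is, the learning code only ever sees this short list of numbers.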

And no, as far as I know, this doesn't affect population dynamics, although I think there is some way they could work together. For example, by looking at the weights that link different species, we can determine how those species interact with each other.

For example:

Species A, which could feed on species B, doesn't even try to hunt species B, because species B has learnt to run away and species A can't run as fast, so trying to hunt them is a waste of energy.

I hope that wasn't too obscure (I can't find a better word in English) | |

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 7:44 pm | |

| - Daniferrito wrote:

- We don't have to define each state and action pair. That's what the weighted functions (features) are for. We extract from the state the things that interest us and plug them into the functions (if we have a state, it's really easy to calculate the distance from the cell we care about to the nearest food). Then each state is simplified to these features. We only have to tell the agent how to extract those features.

And no, as far as I know, this doesn't affect population dynamics, although I think there is some way they could work together. For example, by looking at the weights that link different species, we can determine how those species interact with each other.

For example:

Species A, which could feed on species B, doesn't even try to hunt species B, because species B has learnt to run away and species A can't run as fast, so trying to hunt them is a waste of energy.

I hope that wasn't too obscure (I can't find a better word in English)

I get it now, thanks for the clarification | |

|   | | NickTheNick

Overall Team Co-Lead

Posts : 2312

Reputation : 175

Join date : 2012-07-22

Age : 28

Location : Canada

|  Subject: Re: Crash Course into AI  Thu Jan 17, 2013 10:39 pm | |

| Now this level of the conversation may be beyond my understanding, but I do have some comments to make.

- ido66667 wrote:

- Now, your learning model can work very well in pacman, so feel free, but in thrive it will encounter some fundamental issues,

Ido, please, refrain from being condescending.

- ido66667 wrote:

- 3. Cells and non - sentient creatures that don't have a brain don't really "learn", they "learn" through neutral selection,

Bear in mind that creatures can learn basic concepts. For example, if a cell goes near another cell and gets eaten, it will learn not to go near that cell.

Also, I couldn't ignore this, it is called Natural Selection.

- ido66667 wrote:

- That fundamental problem was once addressed (In much less polite manner than how I address now) by banshee (A very talented programmer)

I wouldn't call your explanation that much more polite. You were quite rude in certain areas, not just in this post but earlier ones, and you omitted to read many of Dani's earlier posts.

By the way, his name is Bashinerox, not banshee.

- ido66667 wrote:

- Analogy time:

Programmers are not like "Horse whisperers", but are like normal horse riders, they can't whisper to the horse vague and very abstract commands and the horse will do so... They have to give the horse specific commands... But computers are much more "dumb" than horses.

Ido, you are a relative beginner to programming, not to say I am any better myself. Posting analogies and jokes pertaining to code doesn't increase our perception of your capabilities. It is also slightly offensive, I would think, to explain to Dani how coding works in such a simple way when he is clearly a more experienced programmer than yourself.

Remember what I've said, we appreciate your contributions only so long as you refrain from becoming insulting or asserting your knowledge of whatever fields onto others. | |

|   | | Ionstorm

Newcomer

Posts : 11

Reputation : 1

Join date : 2013-01-17

Location : Cambridge

|  Subject: Re: Crash Course into AI  Fri Jan 18, 2013 2:17 pm | |

| From what I see here, I think one way of implementing this system in code would be to have one AI manager that controls the AI and a species class that stores the weights of goals.

Like

AiMan->Species

World->Update->BananaSlug->Update->Find the closest object with the highest weight, with distance having an exponential falloff in control, e.g. close objects have much more control even if they are less important weight-wise.

There must be more ways than this, but this is all I can think of. | |
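The structure outlined in this post could be sketched in Python roughly as follows. Everything here is an assumption for illustration: the `Species`, `WorldObject` and `AiManager` names, the `falloff` rate and the example weights. The score is simply the goal weight multiplied by an exponential falloff in distance.

```python
import math
from dataclasses import dataclass


@dataclass
class Species:
    """Stores how much the species cares about each kind of object."""
    name: str
    goal_weights: dict
    falloff: float = 0.5  # assumed decay rate per unit of distance


@dataclass
class WorldObject:
    kind: str
    position: tuple


class AiManager:
    """Scores every object for an agent and picks the most attractive one."""

    def score(self, species, agent_pos, obj):
        distance = math.dist(agent_pos, obj.position)
        # Exponential falloff: close objects dominate even with a lower weight.
        return species.goal_weights.get(obj.kind, 0.0) * math.exp(-species.falloff * distance)

    def pick_target(self, species, agent_pos, objects):
        # None is returned when there is nothing to choose from.
        return max(objects, key=lambda o: self.score(species, agent_pos, o), default=None)


slug = Species("BananaSlug", {"food": 1.0, "mate": 3.0})
objects = [WorldObject("food", (1.0, 0.0)), WorldObject("mate", (6.0, 0.0))]
target = AiManager().pick_target(slug, (0.0, 0.0), objects)
print(target.kind)  # food: nearby food beats a distant, higher-weighted mate
```

Because the weights live on the species rather than on the individual, every agent of that species behaves according to the same priorities.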

|   | | ido66667

Regular

Posts : 366

Reputation : 5

Join date : 2011-05-14

Age : 110

Location : Space - Time

|  Subject: Re: Crash Course into AI  Fri Jan 18, 2013 3:19 pm | |

| - NickTheNick wrote:

- Now this level of the conversation may be beyond my understanding, but I do have some comments to make.

- ido66667 wrote:

- Now, your learning model can work very well in pacman, so feel free, but in thrive it will encounter some fundamental issues,

Ido, please, refrain from being condescending.

- ido66667 wrote:

- 3. Cells and non - sentient creatures that don't have a brain don't really "learn", they "learn" through neutral selection,

Bear in mind that creatures can learn basic concepts. For example, if a cell goes near another cell and gets eaten, it will learn not to go near that cell.

Also, I couldn't ignore this, it is called Natural Selection.

- ido66667 wrote:

- That fundamental problem was once addressed (In much less polite manner than how I address now) by banshee (A very talented programmer)

I wouldn't call your explanation that much more polite. You were quite rude in certain areas, not just in this post but earlier ones, and you omitted to read many of Dani's earlier posts.

By the way, his name is Bashinerox, not banshee.

- ido66667 wrote:

- Analogy time:

Programmers are not like "Horse whisperers", but are like normal horse riders, they can't whisper to the horse vague and very abstract commands and the horse will do so... They have to give the horse specific commands... But computers are much more "dumb" than horses.

Ido, you are a relative beginner to programming, not to say I am any better myself. Posting analogies and jokes pertaining to code doesn't increase our perception of your capabilities. It is also slightly offensive, I would think, to explain to Dani how coding works in such a simple way when he is clearly a more experienced programmer than yourself.

Remember what I've said, we appreciate your contributions only so long as you refrain from becoming insulting or asserting your knowledge of whatever fields onto others.

Look, while he is better than me, that doesn't take away my right to ask about, criticise or question his ideas as long as I don't use personal attacks (Argue against a proposition by attacking )... I questioned his proposition, not him. I used analogies and jokes because this is my way of explaining things; if one of my analogies is wrong, feel free to point it out... If you don't find my jokes funny, that just says you have a different taste in humor, and that's okay. I thought that there was a fundamental problem, he explained, and I said that I was wrong. I think that right now you questioned me, myself... not what my arguments were. I also feel (correct me if I am wrong) that you suggest that I had bad intent in mind. In fact, when I wrote this post, I tried to avoid any insulting stuff; if I did insult Dani, I am sorry. Not to mention that this is completely off topic, so to avoid "Threadjacking" this, if you want to continue this unrelated argument, PM me. | |

|   | | NickTheNick

Overall Team Co-Lead

Posts : 2312

Reputation : 175

Join date : 2012-07-22

Age : 28

Location : Canada

|  Subject: Re: Crash Course into AI  Fri Jan 18, 2013 6:41 pm | |

| - ido66667 wrote:

- as long as I don't use personal attacks (Argue ageinst a proposition by attacking )...

Good, but you were bordering on it. - ido66667 wrote:

- If you don't find my jokes funny, that

just says you have a different taste of humor, and that okay. It's not to do with the taste of the joke, it was the intent behind it. - ido66667 wrote:

- in fact when I written this post, I tried to

avoid any insulting stuff, if I did inaulted Dani, I am sorry. Good to see your intentions are pure. Apologies are always appreciated. - ido66667 wrote:

- to avoid "Threadjacking"

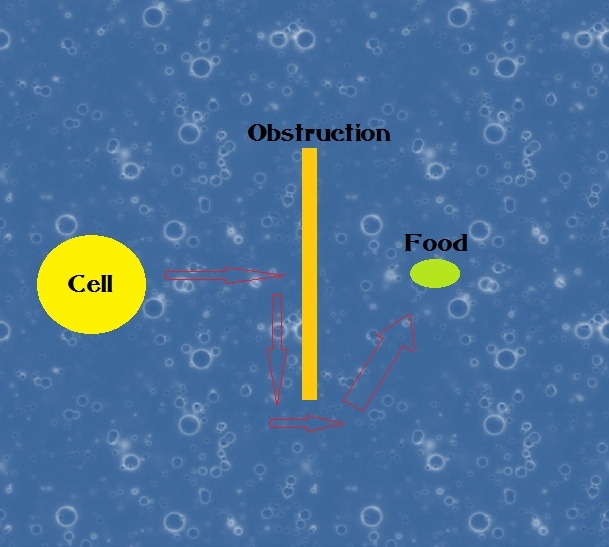

this and if you want to continue this unrealated argument PM me. Do not try to dismiss this, it is pertaining to you and is important to address. @Dani: How would the path-finding of the AI deal with a dynamic environment? Also, how would they retain more natural paths? For example, if a cell senses some food behind an obstruction, it will normally walk into the obstruction, then slide to the left or right along the obstruction until it is no longer in the way and then it goes straight for the food. This is because AI pathfinding usually prevents them from backtracking. - Spoiler:

However, with a more natural pathfinding system, the cell takes a route around the wall, without just walking into it and sliding across it, and then curves around and goes for the food. - Spoiler:

Would this work with the pacman system? | |

|   | | Ionstorm

Newcomer

Posts : 11

Reputation : 1

Join date : 2013-01-17

Location : Cambridge

|  Subject: Re: Crash Course into AI  Fri Jan 18, 2013 7:50 pm | |

| Not meaning to butt in here, but Nick, the first example uses a sub-par pathfinding system that relies on heuristics (rules of thumb) to work out a path quickly, by working along walls to decrease the processing power needed.

The second one shows a non-optimized method that could be found using an A* pathfinding algorithm or a navigation mesh with spline curves.

For example, with an A* pathfinding algorithm you could have a grid that you can walk on; when anything in the game sits on top of it, it marks the points on the grid you can't go to.

With a navigation mesh, you change the mesh on the fly and then work out a path that stays within your movable area.

In summary, you could have the nice curved movement path, but it would use more processing power, as the computer would need to work out a more extensive set of paths it could follow and then choose the nice curvy one.

I hope this gives an idea.

Over to you, Dani | |
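For reference, the grid variant mentioned in this post could look something like the Python sketch below: A* over a grid of walkable ('0') and blocked ('1') cells, moving in four directions with a Manhattan-distance heuristic. The grid encoding, the `a_star` name and the example map are assumptions for illustration; the navigation-mesh and spline-smoothing variant is not shown.

```python
import heapq
from typing import Optional


def a_star(grid, start, goal) -> Optional[list]:
    """Shortest 4-connected path of (row, col) cells, or None if the goal is unreachable."""

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was found already
        row, col = current
        for r, c in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == "1":
                continue  # outside the grid or blocked
            new_cost = cost + 1
            if new_cost < best_cost.get((r, c), float("inf")):
                best_cost[(r, c)] = new_cost
                came_from[(r, c)] = current
                heapq.heappush(open_heap, (new_cost + heuristic((r, c)), new_cost, (r, c)))
    return None


# A wall sits between the cell (bottom row) and the food (top row).
grid = ["000000",
        "011110",
        "000000"]
path = a_star(grid, start=(2, 2), goal=(0, 2))
print(len(path) - 1)  # 6 steps, going around the left end of the wall
```

Unlike the slide-along-the-wall behaviour, the search commits to going around the obstacle from the first step.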

|   | | Daniferrito

Experienced

Posts : 726

Reputation : 70

Join date : 2012-10-10

Age : 30

Location : Spain

|  Subject: Re: Crash Course into AI  Fri Jan 18, 2013 9:19 pm | |

| Well, I actually thought that we didn't need any pathfinding for the cell stage. Other than other cells (which are more or less covered by the basic AI), what else can get in the way of a cell?

Anyway, if we need pathfinding, the AI algorithm only needs the distance (the real distance, having to go around obstacles), not the exact path. However, in order to know the real distance, we need to calculate the path.

Unless the environment is really, really simple, just a heuristic (what is called a greedy algorithm) won't make a good search algorithm for pathfinding. It gets stuck in places too much (in Nick's example, it would only try to go through the obstacle, not around it). We need some kind of A*. I personally don't know how A* works with anything other than a discrete space, but we can easily simplify our problem to a discrete space. I had never heard of spline curves before, let alone how they can be integrated into pathfinding.

About the dynamic environment, the easiest way would be just to calculate the path for the current environment and recalculate it when it changes (we actually need to recalculate it anyway).

What a pathfinding algorithm usually finds is the second option.

I believe there are already some libraries that can do this for us, as it is a very common problem.

Actually, Ido, I love that you suggest things. To be fair, something like your idea would be the best AI for the first release of the microbe stage. The only problems are scalability (each element added is increasingly harder) and that you must know everything beforehand (for a pair of somewhat evolved cells, you don't know beforehand how they would interact, or whether cell A should fear cell B or the other way around). Please, keep adding ideas. | |
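To make the "real distance" point concrete, here is a small Python sketch that feeds a path length, rather than a straight-line distance, into the food feature, and simply recomputes it when the environment changes. It uses breadth-first search in place of A*, which is an assumption made for brevity: on a grid where every step costs the same, both give the same shortest distance. The grid encoding ('0' free, '1' blocked) and the function names are hypothetical.

```python
from collections import deque


def path_distance(grid, start, goal):
    """Number of steps from start to goal around blocked cells, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (row, col), steps = queue.popleft()
        if (row, col) == goal:
            return steps
        for r, c in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            if inside and grid[r][c] == "0" and (r, c) not in seen:
                seen.add((r, c))
                queue.append(((r, c), steps + 1))
    return None


def food_feature(grid, cell, food):
    # 1 / (1 + real distance); unreachable food contributes nothing.
    distance = path_distance(grid, cell, food)
    return 0.0 if distance is None else 1.0 / (1.0 + distance)


open_water = ["000", "000", "000"]
with_wall = ["000", "110", "000"]  # an obstacle has moved in between

before = path_distance(open_water, (2, 1), (0, 1))  # 2 steps, straight up
after = path_distance(with_wall, (2, 1), (0, 1))    # 4 steps, around the wall
```

The feature for the same piece of food drops from 1/3 to 1/5 once the obstacle appears, so the agent's estimate reacts to the environment without it ever needing the exact path.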

|   | | Ionstorm

Newcomer

Posts : 11

Reputation : 1

Join date : 2013-01-17

Location : Cambridge

|  Subject: Re: Crash Course into AI  Sat Jan 19, 2013 6:56 am | |

| Ok, yes Dani, what about other objects in the space, like food clumps, that could be in the way and that it has to navigate around?

The way an A* search could work is by having the objects occupy a position in the world that is turned into a bounding-box-esque obstacle on the mesh, like:

X = 2.5 of object

Y = 5.3 of object

Then take these coordinates and the size and turn them into a grid space, with 0s for free space and 1s for occupied space in this example:

000000 (the grid that the A* works on)

001100

000000

000000

then moving onto

000000

000110

000000

000000

and so forth.

But with something like a navigation mesh, which defines the area you can move in, you find points that give a fast way of getting to the target, send them through a splining algorithm that smooths the route into a bendy line, check that the line still lies within the nav mesh, and then you're good to go.

Remember, Google is your best friend | |
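The grid step described in this post, turning an object's position and size into occupied cells, could be sketched in Python like this. The object format (centre and size of an axis-aligned bounding box), the `cell_size` parameter and the example coordinates are assumptions chosen so that the output reproduces the two small grids shown in the post; they are not the post's own X and Y values.

```python
import math


def rasterize(objects, width, height, cell_size=1.0):
    """Return grid rows of '0' (free) and '1' (occupied).

    Each object is (centre_x, centre_y, size_x, size_y) in world units.
    """
    grid = [["0"] * width for _ in range(height)]
    for cx, cy, sx, sy in objects:
        # Range of cells touched by the bounding box.
        first_col = math.floor((cx - sx / 2) / cell_size)
        last_col = math.ceil((cx + sx / 2) / cell_size) - 1
        first_row = math.floor((cy - sy / 2) / cell_size)
        last_row = math.ceil((cy + sy / 2) / cell_size) - 1
        # Clip to the grid so objects partly outside the map are still handled.
        for row in range(max(first_row, 0), min(last_row, height - 1) + 1):
            for col in range(max(first_col, 0), min(last_col, width - 1) + 1):
                grid[row][col] = "1"
    return ["".join(row) for row in grid]


# A 2x1 food clump, then the same clump after drifting one unit to the right.
print(rasterize([(3.0, 1.5, 2.0, 1.0)], width=6, height=4))
# ['000000', '001100', '000000', '000000']
print(rasterize([(4.0, 1.5, 2.0, 1.0)], width=6, height=4))
# ['000000', '000110', '000000', '000000']
```

Rebuilding the grid whenever objects move gives the search an up-to-date map of where it cannot go.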
